Last week I was experimenting with GPT-3. I was thinking I would figure it out in a day or two, but boy, it took me quite some time to understand it. I was very amazed by the idea behind this model; it really felt like a huge deal. I will talk about this in a bit. First, let me tell you what GPT-3 is.

By the way, I had been waiting nine months to get GPT-3 access.

What is GPT-3? Why is there so much hype around it?

Let’s take a look!

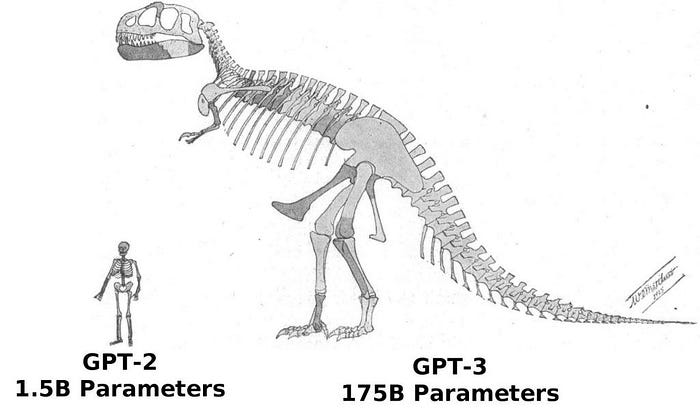

A rough size comparison: GPT-2, represented by a human skeleton, next to GPT-3, represented by the skeleton of a Tyrannosaurus rex. Public-domain illustration by William Matthew, published in 1905. GPT-3 has more than 100 times as many parameters as GPT-2.
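To put the caption's "more than 100 times" in numbers: [1] reports 175 billion parameters for the largest GPT-3 model, and the largest GPT-2 model has about 1.5 billion. The memory figure below is only a back-of-the-envelope estimate that assumes 16-bit (2-byte) weights.

```python
GPT2_PARAMS = 1.5e9   # largest GPT-2 model, about 1.5 billion parameters
GPT3_PARAMS = 175e9   # largest GPT-3 model, 175 billion parameters (per [1])

# How many times larger GPT-3 is than GPT-2
ratio = GPT3_PARAMS / GPT2_PARAMS

# Assumption: each weight is stored as a 16-bit float, i.e. 2 bytes
weights_gb = GPT3_PARAMS * 2 / 1e9

print(f"GPT-3 / GPT-2 parameter ratio: {ratio:.1f}x")
print(f"GPT-3 weights at 2 bytes each: {weights_gb:.0f} GB")
```

This prints a ratio of 116.7x and roughly 350 GB of weights, far more than fits on a single GPU.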

GPT-3 is a language model. Given the previous word in the sentence, it predicts the next word in the sentence. There was excitement all around me. I started to do research, I read a lot of articles about it, and then I found that I was surprised, I was totally surprised by the concept. I will share GPT-3 theory with you. This will help a lot. This theory will help you understand how GPT-3 works. Also, A short film written by GPT-3 The Turing Test | A.I. Written Short Film.

In terms of customization, GPT-3 now has 175 billion parameters. This is a big number. This is why I set it up for a long time. In terms of architecture, the GPT-3 architecture has two layers. Subordinate. The first layer is the storage layer. The storage layer contains a hidden state. The storage layer has 900 million parameters. The storage layer uses LSTM for storage. The second layer is the output layer. The output layer consists of 512 nodes. This is a big cloak. The output layer uses LSTM as the output layer.

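Did you notice anything unusual? In fact, the paragraphs above were not written by me but by GPT-3, minor grammatical errors and all! Note also that the architecture details it states so confidently are wrong: according to [1], the 175-billion-parameter GPT-3 is a Transformer decoder with 96 layers, not a two-layer LSTM. From here on, the article is in my own words, I guarantee!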
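The generated text does get one thing right: GPT-3 is trained to predict the next word (strictly, the next token) from what comes before it, although it conditions on the whole preceding context rather than only the previous word. As a toy illustration of that idea only, here is a bigram model that counts which word follows which and then generates text greedily, one word at a time. It has nothing to do with GPT-3's Transformer internals; the corpus and the function names are made up for this example.

```python
from collections import Counter, defaultdict


def train_bigram(text):
    """Count, for every word, which words follow it in the training text."""
    words = text.split()
    counts = defaultdict(Counter)
    for prev_word, next_word in zip(words, words[1:]):
        counts[prev_word][next_word] += 1
    return counts


def generate(counts, start, n_words):
    """Greedy autoregressive generation: repeatedly append the most likely next word."""
    out = [start]
    for _ in range(n_words):
        followers = counts.get(out[-1])
        if not followers:
            break  # no known continuation for this word
        # Ties go to the follower seen first in the corpus (Counter ordering)
        out.append(followers.most_common(1)[0][0])
    return " ".join(out)


corpus = "the cat sat on the mat the cat ate the fish"
model = train_bigram(corpus)
print(generate(model, "the", 4))
```

This prints `the cat sat on the`: after "the", the model picks "cat" because that pair appears twice in the corpus, and each chosen word becomes the context for the next prediction.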

I know that most of you do not have access to the API, but in case you are curious, that section was generated with the following code:

```python
import os

# Legacy (pre-1.0) interface of the openai Python library
import openai

openai.api_key = os.getenv("OPENAI_API_KEY")

response = openai.Completion.create(
    engine="davinci",
    prompt=(
        "Medium Article May 16, 2021\n"
        " Title:My First Hands on Experience with GPT-3!\n"
        " tags: machine-learning, gpt3, hands on with-gpt3, gpt3 example code\n"
        " Summary: I am sharing my early experiments with OpenAI’s new language "
        "prediction model (GPT-3) beta. I am giving various facts about the GPT-3, "
        "its configuration. I am explaining why I think GPT-3 is the next big thing. "
        "I am also adding various example codes of the GPT3. In the end, I conclude "
        "that it should be used by everyone.\n"
        " Full text: "
    ),
    temperature=0.7,
    max_tokens=1766,
    top_p=1,
    frequency_penalty=0,
    presence_penalty=0,
)

# The generated article text is in the first completion choice
print(response["choices"][0]["text"])
```

Hands-on Examples
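Before the examples, a quick look at what the sampling parameters in the call above do. OpenAI's server-side implementation is not public, so this is only a sketch of the standard techniques those names refer to: frequency and presence penalties subtract from the scores (logits) of tokens that have already been generated, following the formula OpenAI has documented for these parameters; temperature rescales the logits before the softmax; and `top_p` applies nucleus sampling. All function names here are hypothetical.

```python
import math
import random


def adjust_logits(logits, counts, frequency_penalty=0.0, presence_penalty=0.0):
    """Penalise tokens already generated; counts[j] = times token j has appeared."""
    return [
        logit - c * frequency_penalty - (1.0 if c > 0 else 0.0) * presence_penalty
        for logit, c in zip(logits, counts)
    ]


def softmax(logits, temperature=1.0):
    """Temperature-scaled softmax; a lower temperature sharpens the distribution."""
    scaled = [x / temperature for x in logits]
    m = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def top_p_filter(probs, top_p=1.0):
    """Nucleus filtering: keep the most likely tokens until their mass reaches top_p."""
    order = sorted(range(len(probs)), key=lambda i: probs[i], reverse=True)
    kept, mass = [], 0.0
    for i in order:
        kept.append(i)
        mass += probs[i]
        if mass >= top_p:
            break
    kept_set = set(kept)
    total = sum(probs[i] for i in kept)
    return [probs[i] / total if i in kept_set else 0.0 for i in range(len(probs))]


def sample_next(logits, counts, temperature=0.7, top_p=1.0,
                frequency_penalty=0.0, presence_penalty=0.0, rng=random):
    """Pick the index of the next token from toy logits."""
    adjusted = adjust_logits(logits, counts, frequency_penalty, presence_penalty)
    probs = top_p_filter(softmax(adjusted, temperature), top_p)
    return rng.choices(range(len(probs)), weights=probs, k=1)[0]


if __name__ == "__main__":
    logits = [2.0, 1.0, 0.5, -1.0]  # toy scores for four candidate tokens
    counts = [0, 0, 0, 0]           # nothing generated yet, so no penalties apply
    print(sample_next(logits, counts, temperature=0.7, top_p=1.0))
```

With the settings used in the call (`top_p=1` and both penalties at 0), only the temperature of 0.7 changes anything: it makes the most likely tokens somewhat more likely than plain sampling would.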

1. Let’s see what GPT-3 has to say about Elon dumping crypto 😂

2. I asked the model to write me a good introduction for GitHub, and this is what it generated.

3. Building an image classification model that tells cars from trucks, using Keras.

4. Let’s create a blueprint for a data science resume cover letter template, so that you have the materials you need next time!

Let’s go…

5. Finally, we will instruct GPT-3 to use the Turtle library in Python to draw a chessboard.
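GPT-3's generated code for the chessboard is not reproduced in this text, so for comparison here is a hand-written sketch of the same task (not the model's output). The square geometry lives in a pure helper, `chessboard_squares` (a hypothetical name), so it can be checked without a display; `turtle` is imported only inside the drawing function because it needs Tk and an open window.

```python
def chessboard_squares(size=8, square=40, origin=(-160, -160)):
    """Return (x, y, colour) for the lower-left corner of every square, row by row."""
    ox, oy = origin
    squares = []
    for row in range(size):
        for col in range(size):
            # Bottom-left square is dark, as on a real chessboard
            colour = "black" if (row + col) % 2 == 0 else "white"
            squares.append((ox + col * square, oy + row * square, colour))
    return squares


def draw_chessboard(size=8, square=40):
    """Draw the board with turtle graphics, centred on the origin."""
    import turtle  # imported here so the geometry above works without Tk

    pen = turtle.Turtle()
    pen.speed(0)
    pen.hideturtle()
    origin = (-size * square / 2, -size * square / 2)
    for x, y, colour in chessboard_squares(size, square, origin):
        pen.penup()
        pen.goto(x, y)
        pen.pendown()
        pen.fillcolor(colour)
        pen.begin_fill()
        for _ in range(4):  # trace the square counter-clockwise
            pen.forward(square)
            pen.left(90)
        pen.end_fill()
    turtle.done()


if __name__ == "__main__":
    draw_chessboard()
```

Separating the coordinates from the drawing keeps the interesting logic, the alternating colour rule `(row + col) % 2`, easy to test on its own.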

Conclusion

The famous computer scientist Geoffrey Hinton said:

“All you need is a lot of data and a lot of information about the correct answer. You can train a large neural network to do whatever you want.”

This is exactly what OpenAI's GPT-3 is capable of. It can provide users with correct answers and a clear understanding of the context, which makes for a positive user experience. Even without fine-tuning, GPT-3 can handle high-precision search and question-answering tasks.
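Answering questions without task-specific training is what [1] calls few-shot learning: instead of updating the model's weights, you put a handful of worked examples in the prompt and let the model continue the pattern. Below is a minimal sketch of building such a prompt; the `Q:`/`A:` format, the helper name, and the examples are hypothetical, not taken from this article or from OpenAI's documentation. The resulting string could be passed as the `prompt` argument of the `openai.Completion.create` call shown earlier.

```python
def build_few_shot_prompt(examples, question):
    """Assemble a few-shot Q&A prompt: worked examples first, then the new question."""
    blocks = [f"Q: {q}\nA: {a}\n" for q, a in examples]
    # Leave the final answer empty so the model's continuation fills it in
    blocks.append(f"Q: {question}\nA:")
    return "\n".join(blocks)


# Hypothetical examples: the model must infer the task from these alone
examples = [
    ("What is the capital of France?", "Paris"),
    ("What is the capital of Japan?", "Tokyo"),
]
prompt = build_few_shot_prompt(examples, "What is the capital of Italy?")
print(prompt)
```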

Reference

[1] Language Models are Few-Shot Learners, OpenAI, https://arxiv.org/pdf/2005.14165.pdf